
Data resiliency for everyone

Garage is an open-source distributed storage service you can self-host to fulfill many needs:

⮞ learn more about use cases ⮜

Garage implements the Amazon S3 API and thus is already compatible with many applications:

⮞ learn more about integrations ⮜

Garage provides data resiliency by replicating data 3x over distant servers:

⮞ learn more about our design ⮜

Did you notice that this website is hosted and served by Garage?

Keeping requirements low

We worked hard to keep requirements as low as possible, as we target the widest possible audience.

- CPU: any x86_64 CPU from the last 10 years, ARMv7 or ARMv8.

- RAM: 1GB

- Disk Space: at least 16GB

- Network: 200 ms latency or less, 50 Mbps bandwidth or more

- Heterogeneous hardware: build a cluster with whatever second-hand machines are available

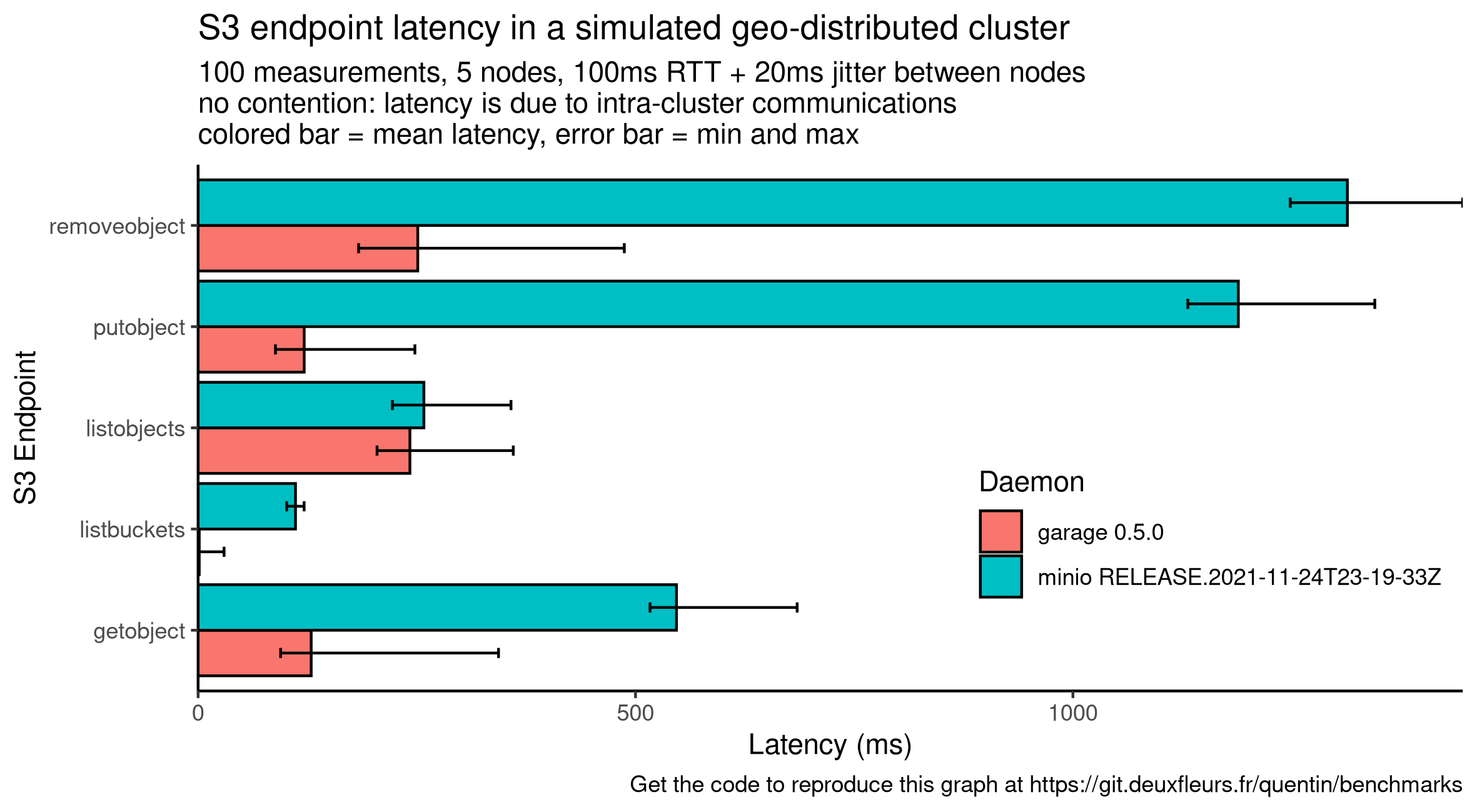

For the network, as we do not use consensus algorithms like Paxos or Raft, Garage is less sensitive to latency. Thanks to Rust and its zero-cost abstractions, we keep CPU and memory usage low.

Built on the shoulders of giants

- Dynamo: Amazon’s Highly Available Key-value Store by DeCandia et al.

- Conflict-Free Replicated Data Types by Shapiro et al.

- Maglev: A Fast and Reliable Software Network Load Balancer by Eisenbud et al.

Talks

- (fr, 2021-11-13, video) Garage : Mille et une façons de stocker vos données and slides (html) - during RFID#1 event

- (en, 2021-04-28, pdf) Distributed object storage is centralised

- (fr, 2020-12-02, pdf) Garage : jouer dans la cour des grands quand on est un hébergeur associatif

Community

If you want to talk with us, you can join our Matrix channel at #garage:deuxfleurs.fr. Our code repository and issue tracker, which is where you should report bugs, are hosted on Deuxfleurs' Gitea.

License

Garage's source code is released under the AGPL v3 License. Please note that if you patch Garage and then use it to provide any service over a network, you must share your code!

Sponsors and funding

The Deuxfleurs association has received a grant from NGI POINTER to fund 3 people working on Garage full-time for a year, from October 2021 to September 2022.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme within the framework of the NGI-POINTER Project funded under grant agreement N° 871528.

Quick Start

Let's start your Garage journey! In this chapter, we explain how to deploy Garage as a single-node server and how to interact with it.

Our goal is to introduce you to Garage's workflows. Following this guide is recommended before moving on to configuring a multi-node cluster.

Note that this kind of deployment should not be used in production, as it provides no redundancy for your data!

Get a binary

Download the latest Garage binary from the release pages on our repository:

https://garagehq.deuxfleurs.fr/_releases.html

Place this binary somewhere in your $PATH so that you can invoke the garage command directly (for instance you can copy the binary in /usr/local/bin or in ~/.local/bin).

If a binary of the latest version is not available for your architecture, or if you want a build customized for your system, you can build Garage from source.

Writing a first configuration file

This first configuration file should allow you to get started easily with the simplest possible Garage deployment.

Save it as /etc/garage.toml.

You can also store it somewhere else, but you will have to specify -c path/to/garage.toml at each invocation of the garage binary (for example: garage -c ./garage.toml server, garage -c ./garage.toml status).

metadata_dir = "/tmp/meta"

data_dir = "/tmp/data"

replication_mode = "none"

rpc_bind_addr = "[::]:3901"

rpc_public_addr = "127.0.0.1:3901"

rpc_secret = "1799bccfd7411eddcf9ebd316bc1f5287ad12a68094e1c6ac6abde7e6feae1ec"

bootstrap_peers = []

[s3_api]

s3_region = "garage"

api_bind_addr = "[::]:3900"

root_domain = ".s3.garage"

[s3_web]

bind_addr = "[::]:3902"

root_domain = ".web.garage"

index = "index.html"

The rpc_secret value provided above is just an example. It will work, but in order to secure your cluster you will need to use another one. You can generate such a value with openssl rand -hex 32.
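For scripted setups, an equivalent value can be produced without openssl. The following is a minimal sketch that assumes only the Python standard library; the helper name generate_rpc_secret is hypothetical and not part of Garage.

```python
import secrets


def generate_rpc_secret() -> str:
    """Return 32 random bytes as 64 hex characters, like `openssl rand -hex 32`."""
    return secrets.token_hex(32)


if __name__ == "__main__":
    # Paste the printed value into the rpc_secret field of garage.toml.
    print(generate_rpc_secret())
```

The secrets module draws from the operating system's cryptographic random source, which is why it is used here rather than the random module.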

As you can see in the metadata_dir and data_dir parameters, we are saving Garage's data in /tmp, which gets erased when your system reboots. This means that data stored on this Garage server will not be persistent. Change these to locations on your local disk if you want your data to be persisted properly.

Launching the Garage server

Use the following command to launch the Garage server with our configuration file:

RUST_LOG=garage=info garage server

You can tune Garage's verbosity as follows (from less verbose to more verbose):

RUST_LOG=garage=info garage server

RUST_LOG=garage=debug garage server

RUST_LOG=garage=trace garage server

Log level info is recommended for most use cases.

Log level debug can help you check why your S3 API calls are not working.

Checking that Garage runs correctly

The garage utility is also used as a CLI tool to configure your Garage deployment.

It uses values from the TOML configuration file to find the Garage daemon running on the local node. Therefore, if your configuration file is not at /etc/garage.toml, you will again have to specify -c path/to/garage.toml.

If the garage CLI is able to correctly detect the parameters of your local Garage node, the following command should be enough to show the status of your cluster:

garage status

This should show something like this:

==== HEALTHY NODES ====

ID Hostname Address Tag Zone Capacity

563e1ac825ee3323… linuxbox 127.0.0.1:3901 NO ROLE ASSIGNED

Creating a cluster layout

Creating a cluster layout for a Garage deployment means informing Garage of the disk space available on each node of the cluster as well as the zone (e.g. datacenter) each machine is located in.

For our test deployment, we are using only one node. The way in which we configure it does not matter; you can simply write:

garage layout assign -z dc1 -c 1 <node_id>

where <node_id> corresponds to the identifier of the node shown by garage status (first column).

You can simply enter a prefix of that identifier.

For instance here you could write just garage layout assign -z dc1 -c 1 563e.
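To illustrate what using a prefix means, here is a minimal sketch of prefix resolution. It is not Garage's actual implementation: the function name resolve_node_prefix is hypothetical, and the sketch assumes a prefix is only acceptable when it matches exactly one known node.

```python
from typing import Iterable


def resolve_node_prefix(prefix: str, node_ids: Iterable[str]) -> str:
    """Return the single node ID starting with prefix, or raise ValueError."""
    matches = [node_id for node_id in node_ids if node_id.startswith(prefix)]
    if len(matches) != 1:
        # Zero matches: unknown node. Several matches: the prefix is ambiguous.
        raise ValueError(
            f"prefix {prefix!r} matches {len(matches)} nodes, expected exactly 1"
        )
    return matches[0]


if __name__ == "__main__":
    known = ["563e1ac825ee3323aa441e72c26d1030d6d4414aeb3dd25287c531e7fc2bc95d"]
    print(resolve_node_prefix("563e", known))
```

With a single node any non-conflicting prefix works; on larger clusters a slightly longer prefix may be needed to stay unambiguous.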

The layout then has to be applied to the cluster, using:

garage layout apply

Creating buckets and keys

In this section, we will suppose that we want to create a bucket named nextcloud-bucket that will be accessed through a key named nextcloud-app-key.

Don't forget that the help command and the --help flag can help you anywhere: the CLI tool is self-documented! Two examples:

garage help

garage bucket allow --help

Create a bucket

Let's take an example where we want to deploy NextCloud using Garage as the main data storage.

First, create a bucket with the following command:

garage bucket create nextcloud-bucket

Check that everything went well:

garage bucket list

garage bucket info nextcloud-bucket

Create an API key

The nextcloud-bucket bucket now exists on the Garage server; however, it cannot be accessed until we add an API key with the proper access rights.

Note that API keys are independent of buckets: one key can access multiple buckets, multiple keys can access one bucket.

Create an API key using the following command:

garage key new --name nextcloud-app-key

The output should look as follows:

Key name: nextcloud-app-key

Key ID: GK3515373e4c851ebaad366558

Secret key: 7d37d093435a41f2aab8f13c19ba067d9776c90215f56614adad6ece597dbb34

Authorized buckets:

Check that everything works as intended:

garage key list

garage key info nextcloud-app-key

Allow a key to access a bucket

Now that we have a bucket and a key, we need to give permissions to the key on the bucket:

garage bucket allow \

--read \

--write \

nextcloud-bucket \

--key nextcloud-app-key

You can check the keys allowed on your bucket at any time with:

garage bucket info nextcloud-bucket

Uploading and downloading from Garage

We recommend using the MinIO Client (mc) to interact with files stored in Garage.

Instructions to install it and use it are provided on the MinIO website.

Before reading the following, you need a working mc command on your path.

Note that on certain Linux distributions such as Arch Linux, the MinIO Client binary is called mcli instead of mc (to avoid name clashes with the Midnight Commander).

Configure mc

You need your access key and secret key created above.

We will assume you are invoking mc on the same machine as the Garage server; your S3 API endpoint is therefore http://127.0.0.1:3900.

For this whole configuration, you must set an alias name: we chose my-garage, which you will use for all commands.

Adapt the following command accordingly and run it:

mc alias set \

my-garage \

http://127.0.0.1:3900 \

<access key> \

<secret key> \

--api S3v4

You must also add an environment variable to your configuration to inform MinIO of our region (garage by default, corresponding to the s3_region parameter in the configuration file).

The best way is to add the following snippet to your $HOME/.bash_profile or $HOME/.bashrc file:

export MC_REGION=garage

Use mc

You cannot currently list buckets from mc.

But the following commands and many more should work:

mc cp image.png my-garage/nextcloud-bucket

mc cp my-garage/nextcloud-bucket/image.png .

mc ls my-garage/nextcloud-bucket

mc mirror localdir/ my-garage/another-bucket

Other tools for interacting with Garage

The following tools can also be used to send files to and receive files from Garage:

Refer to the "Integrations" section to learn how to configure applications and command line utilities to integrate with Garage.

Cookbook

A cookbook, when you cook, is a collection of recipes. Similarly, Garage's cookbook contains a collection of recipes that are known to work well! This chapter could also be referred to as "Tutorials" or "Best practices".

- Multi-node deployment: This page will walk you through all of the necessary steps to deploy Garage in a real-world setting.

- Building from source: This page explains how to build Garage from source in case a binary is not provided for your architecture, or if you want to hack with us!

- Integration with Systemd: This page explains how to run Garage as a Systemd service (instead of as a Docker container).

- Configuring a gateway node: This page explains how to run a gateway node in a Garage cluster, i.e. a Garage node that doesn't store data but accelerates access to data present on the other nodes.

- Hosting a website: This page explains how to use Garage to host a static website.

- Configuring a reverse-proxy: This page explains how to configure a reverse-proxy to add TLS support to your S3 API endpoint.

- Recovering from failures: Garage's first selling point is resilience to hardware failures. This section explains how to recover from such a failure in the best possible way.

Deploying Garage on a real-world cluster

To run Garage in cluster mode, we recommend having at least 3 nodes. This will allow you to set up Garage for three-way replication of your data, the safest and most available mode offered by Garage.

We recommend first following the quick start guide in order to get familiar with Garage's command line and usage patterns.

Prerequisites

To run a real-world deployment, make sure the following conditions are met:

- You have at least three machines with sufficient storage space available.

- Each machine has a public IP address which is reachable by other machines. Running behind a NAT is likely to be possible but hasn't been tested for the latest version (TODO).

- Ideally, each machine should have an SSD available in addition to the HDD you are dedicating to Garage. This will allow for faster access to metadata and has the potential to drastically reduce Garage's response times.

- This guide will assume you are using Docker containers to deploy Garage on each node. Garage can also be run independently, for instance as a Systemd service. You can also use an orchestrator such as Nomad or Kubernetes to automatically manage Docker containers on a fleet of nodes.

Before deploying Garage on your infrastructure, you must inventory your machines. For our example, we will suppose the following infrastructure with IPv6 connectivity:

| Location | Name | IP Address | Disk Space |
|---|---|---|---|
| Paris | Mercury | fc00:1::1 | 1 TB |
| Paris | Venus | fc00:1::2 | 2 TB |
| London | Earth | fc00:B::1 | 2 TB |
| Brussels | Mars | fc00:F::1 | 1.5 TB |

Get a Docker image

Our Docker image is currently named dxflrs/amd64_garage and is stored on the Docker Hub.

We encourage you to use a fixed tag (e.g. v0.4.0) and not the latest tag.

For this example, we will use the latest published version at the time of writing, which is v0.4.0, but it's up to you to check the most recent versions on the Docker Hub.

For example:

sudo docker pull dxflrs/amd64_garage:v0.4.0

Deploying and configuring Garage

Each machine will have a similar setup. In particular, you must consider the following folders and files:

- /etc/garage.toml: Garage daemon's configuration (see below)

- /var/lib/garage/meta/: Folder containing Garage's metadata, put this folder on an SSD if possible

- /var/lib/garage/data/: Folder containing Garage's data, this folder will be your main data storage and must be on a large storage (e.g. large HDD)

A valid /etc/garage.toml for our cluster would look as follows:

metadata_dir = "/var/lib/garage/meta"

data_dir = "/var/lib/garage/data"

replication_mode = "3"

compression_level = 2

rpc_bind_addr = "[::]:3901"

rpc_public_addr = "<this node's public IP>:3901"

rpc_secret = "<RPC secret>"

bootstrap_peers = []

[s3_api]

s3_region = "garage"

api_bind_addr = "[::]:3900"

root_domain = ".s3.garage"

[s3_web]

bind_addr = "[::]:3902"

root_domain = ".web.garage"

index = "index.html"

Check the following for your configuration files:

- Make sure rpc_public_addr contains the public IP address of the node you are configuring. This parameter is optional but recommended: if your nodes have trouble communicating with one another, consider adding it.

- Make sure rpc_secret is the same value on all nodes. It should be a 32-byte hex-encoded secret key. You can generate such a key with openssl rand -hex 32.
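Before starting the daemons, the second check can be automated. The sketch below is an illustration only: the function names are hypothetical, the node names are just labels, and accepting upper-case hex digits is an assumption rather than documented Garage behaviour.

```python
import re
from typing import Dict

# 32 bytes, hex-encoded, is exactly 64 hexadecimal characters.
_HEX_32_BYTES = re.compile(r"[0-9a-fA-F]{64}")


def is_valid_rpc_secret(value: str) -> bool:
    """Check that value looks like a 32-byte hex-encoded secret."""
    return _HEX_32_BYTES.fullmatch(value) is not None


def secrets_match(secrets_by_node: Dict[str, str]) -> bool:
    """Return True when every node has the same, well-formed secret."""
    values = set(secrets_by_node.values())
    return len(values) == 1 and all(is_valid_rpc_secret(v) for v in values)
```

For example, calling secrets_match with the rpc_secret read from each node's configuration file returns False as soon as one node still holds the placeholder or a mistyped value.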

Starting Garage using Docker

On each machine, you can run the daemon with:

docker run \

-d \

--name garaged \

--restart always \

--network host \

-v /etc/garage.toml:/etc/garage.toml \

-v /var/lib/garage/meta:/var/lib/garage/meta \

-v /var/lib/garage/data:/var/lib/garage/data \

dxflrs/amd64_garage:v0.4.0

It should be restarted automatically at each reboot. Please note that we use host networking as otherwise Docker containers cannot communicate over IPv6.

Upgrading between Garage versions should be supported transparently, but please check the release notes before doing so! To upgrade, simply stop and remove this container and run the command again with a new version of Garage.

Controlling the daemon

The garage binary has two purposes:

- it acts as a daemon when launched with garage server

- it acts as a control tool for the daemon when launched with any other command

Ensure an appropriate garage binary (the same version as your Docker image) is available in your path.

If your configuration file is at /etc/garage.toml, the garage binary should work with no further change.

You can test your garage CLI utility by running a simple command such as:

garage status

At this point, nodes are not yet talking to one another. Your output should therefore look as follows:

Mercury$ garage status

==== HEALTHY NODES ====

ID Hostname Address Tag Zone Capacity

563e1ac825ee3323… Mercury [fc00:1::1]:3901 NO ROLE ASSIGNED

Connecting nodes together

When your Garage nodes first start, they will generate a local node identifier (based on a public/private key pair).

To obtain the node identifier of a node, once it is generated, run garage node id.

This will print keys as follows:

Mercury$ garage node id

563e1ac825ee3323aa441e72c26d1030d6d4414aeb3dd25287c531e7fc2bc95d@[fc00:1::1]:3901

Venus$ garage node id

86f0f26ae4afbd59aaf9cfb059eefac844951efd5b8caeec0d53f4ed6c85f332@[fc00:1::2]:3901

etc.

You can then instruct nodes to connect to one another as follows:

# Instruct Venus to connect to Mercury (this will establish communication both ways)

Venus$ garage node connect 563e1ac825ee3323aa441e72c26d1030d6d4414aeb3dd25287c531e7fc2bc95d@[fc00:1::1]:3901

You don't need to instruct all nodes to connect to all other nodes: nodes will discover one another transitively.
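To see why a full mesh of connect commands is unnecessary, discovery can be modelled as reachability in a graph where each connect command adds one two-way link. This is an illustrative model only, not Garage's actual peer-discovery protocol; the function name reachable_peers is hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, Set, Tuple


def reachable_peers(start: str, connections: Iterable[Tuple[str, str]]) -> Set[str]:
    """Return every node that start can learn about through chains of connections."""
    # Connections work both ways, so build an undirected adjacency map.
    neighbours: Dict[str, Set[str]] = {}
    for a, b in connections:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    # Breadth-first search from the starting node.
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for peer in neighbours.get(node, ()):
            if peer not in seen:
                seen.add(peer)
                queue.append(peer)
    return seen - {start}
```

In this model, three connect commands (Venus to Mercury, Earth to Mercury, Mars to Earth) are enough for Venus to reach Mercury, Earth and Mars.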

Now if you run garage status on any node, you should have an output that looks as follows:

==== HEALTHY NODES ====

ID Hostname Address Tag Zone Capacity

563e1ac825ee3323… Mercury [fc00:1::1]:3901 NO ROLE ASSIGNED

86f0f26ae4afbd59… Venus [fc00:1::2]:3901 NO ROLE ASSIGNED

68143d720f20c89d… Earth [fc00:B::1]:3901 NO ROLE ASSIGNED

212f7572f0c89da9… Mars [fc00:F::1]:3901 NO ROLE ASSIGNED

Creating a cluster layout

We will now inform Garage of the disk space available on each node of the cluster as well as the zone (e.g. datacenter) in which each machine is located. This information is called the cluster layout and consists of a role that is assigned to each active cluster node.

For our example, we will suppose we have the following infrastructure (Capacity, Identifier and Zone are Garage-specific values described below):

| Location | Name | Disk Space | Capacity | Identifier | Zone |
|---|---|---|---|---|---|
| Paris | Mercury | 1 TB | 10 | 563e | par1 |
| Paris | Venus | 2 TB | 20 | 86f0 | par1 |
| London | Earth | 2 TB | 20 | 6814 | lon1 |
| Brussels | Mars | 1.5 TB | 15 | 212f | bru1 |

Node identifiers

After its first launch, Garage generates a random and unique identifier for each node, such as:

563e1ac825ee3323aa441e72c26d1030d6d4414aeb3dd25287c531e7fc2bc95d

Often a shorter form can be used, containing only the beginning of the identifier, like 563e, which identifies the server "Mercury" located in "Paris" according to our previous table.

The simplest way to match an identifier to a node is to run:

garage status

It will display the IP address associated with each node; from the IP address you will be able to recognize the node.

Zones

Zones are simply user-chosen identifiers that identify a group of servers that are grouped together logically. It is up to the system administrator deploying Garage to decide what "grouped together" means.

In most cases, a zone will correspond to a geographical location (e.g. a datacenter). Behind the scenes, Garage will use zone definitions to try to store the same data in different zones, in order to provide high availability despite the failure of a zone.

Capacity

Garage reasons about disk storage using an abstract metric called the capacity of a node. The capacity configured in Garage must be proportional to the disk space dedicated to the node.

Capacity values must be integers but can be given any meaning. Here we chose that 1 unit of capacity = 100 GB.

Note that the amount of data stored by Garage on each server may not be strictly proportional to its capacity value, as Garage will prioritize having 3 copies of data in different zones, even if this means that capacities will not be strictly respected. For example, in the layout above, nodes Earth and Mars will each always store a copy of everything, and the third copy will have a 66% chance of being stored by Venus and a 33% chance of being stored by Mercury.
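The arithmetic behind these numbers can be checked with a short sketch. It assumes 1 TB is counted as 1000 GB and that, within the Paris zone, the third copy is split in proportion to capacity, which is consistent with the 66% and 33% figures quoted here; the names used are hypothetical.

```python
GB_PER_UNIT = 100  # convention chosen in this guide: 1 capacity unit = 100 GB


def capacity_units(disk_space_gb: int) -> int:
    """Convert a disk size in GB into integer capacity units."""
    return disk_space_gb // GB_PER_UNIT


# Disk space from the example table, in GB (assuming 1 TB = 1000 GB).
disks_gb = {"mercury": 1000, "venus": 2000, "earth": 2000, "mars": 1500}
capacities = {name: capacity_units(gb) for name, gb in disks_gb.items()}

# Earth and Mars are alone in their zones, so each holds one copy of everything.
# The third copy goes to the Paris zone, shared between Mercury and Venus.
paris = {name: capacities[name] for name in ("mercury", "venus")}
paris_total = sum(paris.values())
third_copy_share = {name: cap / paris_total for name, cap in paris.items()}

if __name__ == "__main__":
    print(capacities)
    print(third_copy_share)
```

Venus holds 20 of the 30 capacity units in Paris, hence roughly two thirds of the third copies, and Mercury the remaining third.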

Injecting the topology

Given the information above, we will configure our cluster as follows:

garage layout assign -z par1 -c 10 -t mercury 563e

garage layout assign -z par1 -c 20 -t venus 86f0

garage layout assign -z lon1 -c 20 -t earth 6814

garage layout assign -z bru1 -c 15 -t mars 212f
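If your inventory is kept in a script, the four commands above can be derived from the table. The sketch below only builds and prints the command lines so that they can be reviewed and run by hand; the Node type and the function name are hypothetical helpers, and only the flags already shown above (-z, -c, -t) are used.

```python
from typing import List, NamedTuple


class Node(NamedTuple):
    """One row of the example table (hypothetical helper type)."""
    tag: str
    zone: str
    capacity: int
    id_prefix: str


NODES: List[Node] = [
    Node("mercury", "par1", 10, "563e"),
    Node("venus", "par1", 20, "86f0"),
    Node("earth", "lon1", 20, "6814"),
    Node("mars", "bru1", 15, "212f"),
]


def layout_assign_command(node: Node) -> str:
    """Build the `garage layout assign` command line for one node."""
    return (
        f"garage layout assign -z {node.zone} -c {node.capacity} "
        f"-t {node.tag} {node.id_prefix}"
    )


if __name__ == "__main__":
    for node in NODES:
        print(layout_assign_command(node))
```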

At this point, the changes in the cluster layout have not yet been applied. To show the new layout that will be applied, call:

garage layout show

Once you are satisfied with your new layout, apply it with:

garage layout apply

WARNING: if you want to use the layout modification commands in a script, make sure to read this page first.

Using your Garage cluster

Creating buckets and managing keys is done using the garage CLI, and is covered in the quick start guide.

Remember also that the CLI is self-documented thanks to the --help flag and the help subcommand (e.g. garage help, garage key --help).

Configuring S3-compatible applications to interact with Garage is covered in the Integrations section.

Compiling Garage from source

Garage is a standard Rust project.

First, you need rust and cargo.

For instance on Debian:

sudo apt-get update

sudo apt-get install -y rustc cargo

You can also use Rustup to set up a Rust toolchain easily.

Using source from crates.io

Garage's source code is published on crates.io, Rust's official package repository.

This means you can simply ask cargo to download and build this source code for you:

cargo install garage

That's all: garage should now be in $HOME/.cargo/bin.

You can add this folder to your $PATH or copy the binary somewhere else on your system.

For instance:

sudo cp $HOME/.cargo/bin/garage /usr/local/bin/garage

Using source from the Gitea repository

The primary location for Garage's source code is the Gitea repository.

Clone the repository and build Garage with the following commands:

git clone https://git.deuxfleurs.fr/Deuxfleurs/garage.git

cd garage

cargo build

Be careful, as this will make a debug build of Garage, which will be extremely slow!

To make a release build, invoke cargo build --release (this takes much longer).

The binaries built this way are found in target/{debug,release}/garage.

Starting Garage with systemd

We make some assumptions for this systemd deployment.

- Your garage binary is located at /usr/local/bin/garage.

- Your configuration file is located at /etc/garage.toml.

- Your garage.toml must be set with metadata_dir=/var/lib/garage/meta and data_dir=/var/lib/garage/data. This is mandatory to use the systemd hardening feature Dynamic User. Note that in your host filesystem, Garage data will be held in /var/lib/private/garage.

Create a file named /etc/systemd/system/garage.service:

[Unit]

Description=Garage Data Store

After=network-online.target

Wants=network-online.target

[Service]

Environment='RUST_LOG=garage=info' 'RUST_BACKTRACE=1'

ExecStart=/usr/local/bin/garage server

StateDirectory=garage

DynamicUser=true

ProtectHome=true

NoNewPrivileges=true

[Install]

WantedBy=multi-user.target

A note on hardening: garage will be run as a non-privileged user whose user ID is dynamically allocated by systemd. It cannot access (read or write) home folders (/home, /root and /run/user). The rest of the filesystem can be read but not written: only the path seen by the service as /var/lib/garage is writable (mapped to /var/lib/private/garage on your host). Additionally, the process cannot gain new privileges over time.

To start the service and then enable it to start automatically at boot:

sudo systemctl start garage

sudo systemctl enable garage

To see if the service is running and to browse its logs:

sudo systemctl status garage

sudo journalctl -u garage

If you want to modify the service file, do not forget to run systemctl daemon-reload to inform systemd of your modifications.

Gateways

Gateways allow you to expose Garage endpoints (S3 API and websites) without storing data on the node.

Benefits

You can configure Garage as a gateway on all nodes that will consume your S3 API. This provides the following benefits:

- It removes 1 or 2 network RTT. Instead of (querying your reverse proxy then) querying a random node of the cluster that will forward your request to the nodes actually storing the data, your local gateway directly knows which node to query.

- It eases server management. Instead of tracking in your reverse proxy and DNS which nodes are currently part of your Garage cluster, your gateway, being part of the cluster, keeps this information for you. In your software, you will always specify http://localhost:3900.

- It simplifies security. Instead of having to maintain and renew a TLS certificate, you leverage the Secret Handshake protocol we use for our cluster. The S3 API protocol will be in plain text but limited to your local machine.

Limitations

Currently, gateways do not work with the MinIO Client. Follow issue #64 for more information.

Spawn a Gateway

The instructions are similar to those for a regular node. The only difference is that, while configuring the node, you must set the --gateway parameter:

garage layout assign --gateway --tag gw1 <node_id>

garage layout show # review the changes you are making

garage layout apply # once satisfied, apply the changes

Then use http://localhost:3900 when an S3 endpoint is required:

aws --endpoint-url http://127.0.0.1:3900 s3 ls

If a newly added gateway node does not seem to be working, do a full table resync to ensure that the bucket and key lists are correctly propagated:

garage repair -a --yes tables

Exposing buckets as websites

You can expose your bucket as a website with this simple command:

garage bucket website --allow my-website

Now it will be publicly exposed on the web endpoint (by default listening on port 3902).

Our website serving logic is as follows:

- Supports only static websites (no support for PHP or other languages)

- Does not support directory listing

- The index is defined in your garage.toml.

Now we need to infer the URL of your website from your bucket name. Let's assume:

- we set root_domain = ".web.example.com" in garage.toml

- our bucket name is garagehq.deuxfleurs.fr.

Our bucket will be served if the Host field matches one of these 2 values (the port is ignored):

- garagehq.deuxfleurs.fr.web.example.com: you can dedicate a subdomain to your users (here web.example.com).

- garagehq.deuxfleurs.fr: your users can bring their own domain name, they just need to point it to your Garage cluster.
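The matching rule described by these two cases can be sketched as follows. This is an illustration, not Garage's actual implementation: the function name bucket_for_host is hypothetical, and the sketch assumes the Host header carries a DNS name (not a bracketed IPv6 literal).

```python
def bucket_for_host(host_header: str, root_domain: str) -> str:
    """Map a Host header to the name of the bucket that would serve it."""
    # The port is ignored: drop everything after the first colon.
    host = host_header.partition(":")[0]
    # Case 1: <bucket><root_domain>, e.g. my-bucket.web.example.com
    if root_domain and host.endswith(root_domain) and len(host) > len(root_domain):
        return host[: -len(root_domain)]
    # Case 2: the Host header is itself the bucket name (custom domain).
    return host


if __name__ == "__main__":
    root = ".web.example.com"
    print(bucket_for_host("garagehq.deuxfleurs.fr.web.example.com", root))
    print(bucket_for_host("garagehq.deuxfleurs.fr:3902", root))
```

Both calls in the usage example resolve to the bucket garagehq.deuxfleurs.fr.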

You can try this logic locally, without configuring any DNS, thanks to curl:

# prepare your test

echo hello world > /tmp/index.html

mc cp /tmp/index.html garage/garagehq.deuxfleurs.fr

curl -H 'Host: garagehq.deuxfleurs.fr' http://localhost:3902

# should print "hello world"

curl -H 'Host: garagehq.deuxfleurs.fr.web.example.com' http://localhost:3902

# should also print "hello world"

Now that you understand how website logic works on Garage, you can:

- make the website endpoint listen on port 80 (instead of 3902)

- use iptables to redirect port 80 to port 3902: iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 3902

- or configure a reverse proxy in front of Garage to add TLS (HTTPS), CORS support, etc.

You can also take a look at Website Integration to see how you can add Garage to your workflow.

Configuring a reverse proxy

The main reason to add a reverse proxy in front of Garage is to provide TLS to your users.

In production you will likely need your certificates signed by a certificate authority. The most automated way is to use a provider supporting the ACME protocol such as Let's Encrypt, ZeroSSL or Buypass Go SSL.

If you are only testing Garage, you can generate a self-signed certificate to follow the documentation:

openssl req \

-new \

-x509 \

-keyout /tmp/garage.key \

-out /tmp/garage.crt \

-nodes \

-subj "/C=XX/ST=XX/L=XX/O=XX/OU=XX/CN=localhost/emailAddress=X@X.XX" \

-addext "subjectAltName = DNS:localhost, IP:127.0.0.1"

cat /tmp/garage.key /tmp/garage.crt > /tmp/garage.pem

Be careful, as you will need to allow self-signed certificates in your client.

For example, with the MinIO Client, you must add the --insecure flag.

An example:

mc ls --insecure garage/

socat (only for testing purposes)

If you want to test Garage with a TLS frontend, socat can do it for you in a single command:

socat \

"openssl-listen:443,\

reuseaddr,\

fork,\

verify=0,\

cert=/tmp/garage.pem" \

tcp4-connect:localhost:3900

Nginx

Nginx is a well-known reverse proxy suitable for production. We do the configuration in 3 steps: first we define the upstream blocks ("the backends"), then the server block ("the frontend") for the S3 endpoint, and finally the server block for the web endpoint.

The following configuration blocks can all be put in the same /etc/nginx/sites-available/garage.conf.

To make your configuration active, run ln -s /etc/nginx/sites-available/garage.conf /etc/nginx/sites-enabled/.

If you directly put the instructions in the root nginx.conf, keep in mind that these configurations must be enclosed inside an http { } block.

And do not forget to reload nginx with systemctl reload nginx or nginx -s reload.

Defining backends

First, we need to tell nginx how to access our Garage cluster. Because we have multiple nodes, we want to leverage all of them by spreading the load.

In nginx, we can do that with the upstream directive.

Because we have 2 endpoints, one for the S3 API and one to serve websites, we create 2 backends named respectively s3_backend and web_backend.

A documented example for the s3_backend assuming you chose port 3900:

upstream s3_backend {

# if you have a garage instance locally

server 127.0.0.1:3900;

# you can also put your other instances

server 192.168.1.3:3900;

# domain names also work

server garage1.example.com:3900;

# you can assign weights if you have some servers

# that are more powerful than others

server garage2.example.com:3900 weight=2;

}
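To get a feel for what the weight parameter does, the long-run share of requests each server receives under nginx's default weighted round-robin balancing is its weight divided by the sum of all weights. The sketch below computes these shares for the s3_backend example; the function name is hypothetical, and the model ignores failed or slow servers.

```python
from fractions import Fraction
from typing import Dict


def expected_share(weights: Dict[str, int]) -> Dict[str, Fraction]:
    """Return each server's expected fraction of requests: weight / total weight."""
    total = sum(weights.values())
    return {server: Fraction(weight, total) for server, weight in weights.items()}


# Servers of the s3_backend example; nginx uses weight=1 when none is given.
S3_BACKEND = {
    "127.0.0.1:3900": 1,
    "192.168.1.3:3900": 1,
    "garage1.example.com:3900": 1,
    "garage2.example.com:3900": 2,
}

if __name__ == "__main__":
    for server, share in expected_share(S3_BACKEND).items():
        print(f"{server}: {float(share):.0%}")
```

Here garage2.example.com is expected to receive 2 requests out of every 5, and each of the other servers 1 out of 5.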

A similar example for the web_backend assuming you chose port 3902:

upstream web_backend {

server 127.0.0.1:3902;

server 192.168.1.3:3902;

server garage1.example.com:3902;

server garage2.example.com:3902 weight=2;

}

Exposing the S3 API

The configuration section for the S3 API is simple, as we only support path-style access for now. We simply configure the TLS parameters and forward all the requests to the backend:

server {

listen [::]:443 http2 ssl;

ssl_certificate /tmp/garage.crt;

ssl_certificate_key /tmp/garage.key;

# should be the endpoint you want

# aws uses s3.amazonaws.com for example

server_name garage.example.com;

location / {

proxy_pass http://s3_backend;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host;

}

}

Exposing the web endpoint

The web endpoint is a bit more complicated to configure as it listens on many different Host fields.

To better understand the logic involved, you can refer to the Exposing buckets as websites section.

Also, for some applications, you may need to serve CORS headers: Garage cannot serve them directly, but we show how to use nginx to serve them.

You can use the following example as your starting point:

server {

listen [::]:443 http2 ssl;

ssl_certificate /tmp/garage.crt;

ssl_certificate_key /tmp/garage.key;

# We list all the Hosts fields that can access our buckets

server_name *.web.garage

example.com

my-site.tld

;

location / {

# Add these headers only if you want to allow CORS requests

# For production use, more specific rules would be better for your security

add_header Access-Control-Allow-Origin *;

add_header Access-Control-Max-Age 3600;

add_header Access-Control-Expose-Headers Content-Length;

add_header Access-Control-Allow-Headers Range;

# We do not forward OPTIONS requests to Garage

# as it does not support them but they are needed for CORS.

if ($request_method = OPTIONS) {

return 200;

}

proxy_pass http://web_backend;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host;

}

}

Apache httpd

@TODO

Traefik

@TODO

Recovering from failures

Garage is meant to work on old, second-hand hardware. In particular, this makes it likely that some of your drives will fail, and some manual intervention will be needed. Fear not: Garage is fully equipped to handle drive failures in most common cases.

A note on availability of Garage

With nodes dispersed in 3 zones or more, here are the guarantees Garage provides with the 3-way replication strategy (3 copies of all data, which is the recommended replication mode):

- The cluster remains fully functional as long as the machines that fail are in only one zone. This includes a whole zone going down due to power/Internet outage.

- No data is lost as long as the machines that fail are in at most two zones.

Of course this only works if your Garage nodes are correctly configured to be aware of the zone in which they are located.

Make sure this is the case using garage status to check on the state of your cluster's configuration.
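The two guarantees above depend only on how many distinct zones contain a failed machine. The following Python sketch encodes that rule for the 3-way replication mode with nodes spread over 3 zones or more. The function name assess, the returned labels and the node and zone names are hypothetical, chosen for this illustration only.

```python
from typing import Dict, Iterable


def assess(node_zone: Dict[str, str], failed_nodes: Iterable[str]) -> str:
    """Classify a failure scenario for 3-way replication over 3+ zones."""
    # Only the number of distinct zones with a failed machine matters.
    affected_zones = {node_zone[node] for node in failed_nodes}
    if len(affected_zones) <= 1:
        # Failures confined to a single zone (or no failure at all).
        return "fully functional"
    if len(affected_zones) == 2:
        # Full functionality is no longer guaranteed, but a copy survives.
        return "no data lost"
    return "data loss possible"


# Hypothetical cluster: four nodes in three zones.
nodes = {"n1": "zone-a", "n2": "zone-a", "n3": "zone-b", "n4": "zone-c"}
print(assess(nodes, ["n1", "n2"]))
print(assess(nodes, ["n1", "n3"]))
```

Losing both nodes of zone-a counts as a single-zone failure, which is why zone awareness must be configured correctly for the guarantees to hold.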

In case of temporarily disconnected nodes, Garage should automatically re-synchronize when the nodes come back up. This guide will deal with recovering from disk failures that caused the loss of the data of a node.

First option: removing a node

If you don't have spare parts (HDD, SSD) to replace the failed component, and if there are enough remaining nodes in your cluster (at least 3), you can simply remove the failed node from Garage's configuration. Note that if you do intend to replace the failed parts with new ones, using this method followed by adding back the node is not recommended (although it should work), and you should instead use one of the methods detailed in the next sections.

Removing a node is done with the following command:

garage layout remove <node_id>

garage layout show # review the changes you are making

garage layout apply # once satisfied, apply the changes

(you can get the node_id of the failed node by running garage status)

This will repartition the data and ensure that 3 copies of everything are present on the nodes that remain available.

Replacement scenario 1: only data is lost, metadata is fine

The recommended deployment for Garage uses an SSD to store metadata, and an HDD to store blocks of data. In the case where only a single HDD crashes, the blocks of data are lost but the metadata is still fine.

This is very easy to recover by setting up a new HDD to replace the failed one. The node does not need to be fully replaced and the configuration doesn't need to change. We just need to tell Garage to get back all the data blocks and store them on the new HDD.

First, set up a new HDD to store Garage's data directory on the failed node, and restart Garage using the existing configuration. Then, run:

garage repair -a --yes blocks

This will re-synchronize blocks of data that are missing to the new HDD, reading them from copies located on other nodes.

You can check on the progress of this process by running the following command:

garage stats -a

Look out for the following output:

Block manager stats:

resync queue length: 26541

This indicates that one of the Garage nodes is in the process of retrieving missing data from other nodes. This number decreases to zero when the node is fully synchronized.
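If you want to watch this number from a script, a minimal Python sketch could extract it from the text printed by the command. It assumes the output contains lines shaped exactly like the sample above; the helper names resync_queue_lengths and fully_synchronized are ours and not part of Garage.

```python
import re
from typing import List

# Matches lines such as "resync queue length: 26541".
RESYNC_RE = re.compile(r"resync queue length:\s*(\d+)")


def resync_queue_lengths(stats_output: str) -> List[int]:
    """Extract every resync queue length found in the given text."""
    return [int(n) for n in RESYNC_RE.findall(stats_output)]


def fully_synchronized(stats_output: str) -> bool:
    """True when at least one queue was found and all of them are empty."""
    lengths = resync_queue_lengths(stats_output)
    return bool(lengths) and all(n == 0 for n in lengths)


sample = "Block manager stats:\n  resync queue length: 26541\n"
print(resync_queue_lengths(sample), fully_synchronized(sample))
```

In practice the text would come from the command itself, for example with subprocess.run(["garage", "stats", "-a"], capture_output=True, text=True).stdout. Since -a queries all nodes, several queue lengths may be reported, which is why the helper returns a list.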

Replacement scenario 2: metadata (and possibly data) is lost

This scenario covers the case where a full node fails, i.e. both the metadata directory and the data directory are lost, as well as the case where only the metadata directory is lost.

To replace the lost node, we will start from an empty metadata directory, which means Garage will generate a new node ID for the replacement node. We will thus need to remove the previous node ID from Garage's configuration and replace it with the ID of the new node.

If your data directory is stored on a separate drive and is still fine, you can keep it, but it is not necessary to do so. In all cases, the data will be rebalanced and the replacement node will not store the same pieces of data as were originally stored on the one that failed. So if you keep the data files, the rebalancing might be faster but most of the pieces will be deleted anyway from the disk and replaced by other ones.

First, set up a new drive to store the metadata directory for the replacement node (an SSD is recommended), and for the data directory if necessary. You can then start Garage on the new node.

The restarted node should generate a new node ID, and it should be shown as NOT CONFIGURED in garage status.

The ID of the lost node should be shown in garage status in the section for disconnected/unavailable nodes.

Then, replace the broken node by the new one, using:

garage layout assign <new_node_id> --replace <old_node_id> \

-c <capacity> -z <zone> -t <node_tag>

garage layout show # review the changes you are making

garage layout apply # once satisfied, apply the changes

Garage will then start synchronizing all required data on the new node.

This process can be monitored using the garage stats -a command.

Integrations

Garage implements the Amazon S3 protocol, which makes it compatible with many existing software programs.

In particular, you will find here instructions to connect it with:

Generic instructions

To configure S3-compatible software to interact with Garage, you will need the following parameters:

- An API endpoint: this corresponds to the HTTP or HTTPS address used to contact the Garage server. When running Garage locally this will usually be http://127.0.0.1:3900. In a real-world setting, you would usually have a reverse-proxy that adds TLS support and makes your Garage server available under a public hostname such as https://garage.example.com.

- An API access key and its associated secret key. These usually look something like this: GK3515373e4c851ebaad366558 (access key), 7d37d093435a41f2aab8f13c19ba067d9776c90215f56614adad6ece597dbb34 (secret key). These keys are created and managed using the garage CLI, as explained in the quick start guide.

Most S3 clients can be configured easily with these parameters, provided that you follow these guidelines:

- Force path style: Garage does not support DNS-style buckets, which are now the default on Amazon S3. Instead, Garage uses the legacy path-style bucket addressing. Remember to configure your client accordingly.

- Configuring the S3 region: Garage requires your client to talk to the correct "S3 region", which is set in the configuration file. This is often set just to garage. If this is not configured explicitly, clients usually try to talk to region us-east-1. Garage should normally redirect your client to the correct region, but in case your client does not support this you might have to configure it manually.
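To illustrate the difference between the two addressing styles, here is a short Python sketch that builds the URL of an object in both forms. The function names and the bucket and object names are assumptions made for this example. Only the first form, path style, is the one Garage expects.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def path_style_url(endpoint: str, bucket: str, key: str) -> str:
    """Bucket in the path: <endpoint>/<bucket>/<key> (what Garage expects)."""
    parts = urlsplit(endpoint)
    path = "/" + bucket + "/" + quote(key)
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def dns_style_url(endpoint: str, bucket: str, key: str) -> str:
    """Bucket as a subdomain: <bucket>.<endpoint host>/<key> (not supported)."""
    parts = urlsplit(endpoint)
    host = bucket + "." + parts.netloc
    return urlunsplit((parts.scheme, host, "/" + quote(key), "", ""))


print(path_style_url("http://127.0.0.1:3900", "my-bucket", "dir/hello.txt"))
print(dns_style_url("https://garage.example.com", "my-bucket", "dir/hello.txt"))
```

A client left in DNS style would try to resolve a hostname prefixed with the bucket name, which is why the force path style option matters.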

Apps (Nextcloud, Peertube...)

In this section, we cover the following software: Nextcloud, Peertube, Mastodon, Matrix

Nextcloud

Nextcloud is a popular file synchronisation and backup service. By default, Nextcloud stores its data on the local filesystem. If you want to expand your storage to aggregate multiple servers, Garage is the way to go.

An S3 backend can be configured in two ways on Nextcloud, either as Primary Storage or as External Storage. Primary storage will store all your data on S3, in an opaque manner, and will provide the best performance. External storage enables you to select which data will be stored on S3; your file hierarchy will be preserved in S3, but it might be slower.

We cover both methods below. Before following this guide, we assume you have completed some preliminary steps. First, you should have an already installed and configured Nextcloud instance. Second, you should have created a key and a bucket.

As a reminder, you can create a key for your Nextcloud instance as follows:

garage key new --name nextcloud-key

Keep the Key ID and the Secret key somewhere safe; they will be needed later.

Then you can create a bucket and give read/write rights to your key on this bucket with:

garage bucket create nextcloud

garage bucket allow nextcloud --read --write --key nextcloud-key

Primary Storage

Now edit your Nextcloud configuration file to enable object storage.

On my installation, the configuration file is located at the following path: /var/www/nextcloud/config/config.php.

We will add a new root key named objectstore to the $CONFIG dictionary:

<?php

$CONFIG = array(

/* your existing configuration */

'objectstore' => [

'class' => '\\OC\\Files\\ObjectStore\\S3',

'arguments' => [

'bucket' => 'nextcloud', // Your bucket name, must be created before

'autocreate' => false, // Garage does not support autocreate

'key' => 'xxxxxxxxx', // The Key ID generated previously

'secret' => 'xxxxxxxxx', // The Secret key generated previously

'hostname' => '127.0.0.1', // Can also be a domain name, eg. garage.example.com

'port' => 3900, // Put your reverse proxy port or your S3 API port

'use_ssl' => false, // Set it to true if you have a TLS enabled reverse proxy

'region' => 'garage', // Garage has only one region named "garage"

'use_path_style' => true // Garage supports only path style, must be set to true

],

],

That's all: your Nextcloud will now store all your data on S3. To test your new configuration, just reload your Nextcloud webpage and start sending data.

External link: Nextcloud Documentation > Primary Storage

External Storage

From the GUI. Activate the "External storage support" app from the "Applications" page (click on your account icon on the top right corner of your screen to display the menu). Go to your parameters page (also located below your account icon). Click on external storage (or the corresponding translation in your language).

Add a new external storage. Put what you want in "folder name" (eg. "shared"). Select "Amazon S3". Keep "Access Key" for the Authentication field. In Configuration, put your bucket name (eg. nextcloud), the host (eg. 127.0.0.1), the port (eg. 3900 or 443), the region (garage). Tick the SSL box if you have put an HTTPS proxy in front of garage. You must tick the "Path access" box and you must leave the "Legacy authentication (v2)" box empty. Put your Key ID (eg. GK...) and your Secret Key in the last two input boxes. Finally click on the tick symbol on the right of your screen.

Now go to your "Files" app and a new "linked folder" has appeared with the name you chose earlier (eg. "shared").

External link: Nextcloud Documentation > External Storage Configuration GUI

From the CLI. First install the external storage application:

php occ app:install files_external

Then add a new mount point with:

php occ files_external:create \

-c bucket=nextcloud \

-c hostname=127.0.0.1 \

-c port=3900 \

-c region=garage \

-c use_ssl=false \

-c use_path_style=true \

-c legacy_auth=false \

-c key=GKxxxx \

-c secret=xxxx \

shared amazons3 amazons3::accesskey

Adapt the hostname, port, use_ssl, key, and secret entries to your configuration.

Do not change the use_path_style and legacy_auth entries, as other configurations are not supported.

External link: Nextcloud Documentation > occ command > files external

Peertube

Peertube offers a clever integration of S3 by directly exposing its endpoint instead of proxying requests through the application. In other words, Peertube is only responsible for the "control plane" and offloads the "data plane" to Garage. In return, this system is a bit harder to configure, especially with Garage, which supports fewer features than other, older S3 backends. We show that it is still possible to configure Garage with Peertube, allowing you to spread the load and the bandwidth usage over the Garage cluster.

Enable path-style access by patching Peertube

First, you will need to apply a small patch on Peertube (#4510):

From e3b4c641bdf67e07d406a1d49d6aa6b1fbce2ab4 Mon Sep 17 00:00:00 2001

From: Martin Honermeyer <maze@strahlungsfrei.de>

Date: Sun, 31 Oct 2021 12:34:04 +0100

Subject: [PATCH] Allow setting path-style access for object storage

---

config/default.yaml | 4 ++++

config/production.yaml.example | 4 ++++

server/initializers/config.ts | 1 +

server/lib/object-storage/shared/client.ts | 3 ++-

.../production/config/custom-environment-variables.yaml | 2 ++

5 files changed, 13 insertions(+), 1 deletion(-)

diff --git a/config/default.yaml b/config/default.yaml

index cf9d69a6211..4efd56fb804 100644

--- a/config/default.yaml

+++ b/config/default.yaml

@@ -123,6 +123,10 @@ object_storage:

# You can also use AWS_SECRET_ACCESS_KEY env variable

secret_access_key: ''

+ # Reference buckets via path rather than subdomain

+ # (i.e. "my-endpoint.com/bucket" instead of "bucket.my-endpoint.com")

+ force_path_style: false

+

# Maximum amount to upload in one request to object storage

max_upload_part: 2GB

diff --git a/config/production.yaml.example b/config/production.yaml.example

index 70993bf57a3..9ca2de5f4c9 100644

--- a/config/production.yaml.example

+++ b/config/production.yaml.example

@@ -121,6 +121,10 @@ object_storage:

# You can also use AWS_SECRET_ACCESS_KEY env variable

secret_access_key: ''

+ # Reference buckets via path rather than subdomain

+ # (i.e. "my-endpoint.com/bucket" instead of "bucket.my-endpoint.com")

+ force_path_style: false

+

# Maximum amount to upload in one request to object storage

max_upload_part: 2GB

diff --git a/server/initializers/config.ts b/server/initializers/config.ts

index 8375bf4304c..d726c59a4b6 100644

--- a/server/initializers/config.ts

+++ b/server/initializers/config.ts

@@ -91,6 +91,7 @@ const CONFIG = {

ACCESS_KEY_ID: config.get<string>('object_storage.credentials.access_key_id'),

SECRET_ACCESS_KEY: config.get<string>('object_storage.credentials.secret_access_key')

},

+ FORCE_PATH_STYLE: config.get<boolean>('object_storage.force_path_style'),

VIDEOS: {

BUCKET_NAME: config.get<string>('object_storage.videos.bucket_name'),

PREFIX: config.get<string>('object_storage.videos.prefix'),

diff --git a/server/lib/object-storage/shared/client.ts b/server/lib/object-storage/shared/client.ts

index c9a61459336..eadad02f93f 100644

--- a/server/lib/object-storage/shared/client.ts

+++ b/server/lib/object-storage/shared/client.ts

@@ -26,7 +26,8 @@ function getClient () {

accessKeyId: OBJECT_STORAGE.CREDENTIALS.ACCESS_KEY_ID,

secretAccessKey: OBJECT_STORAGE.CREDENTIALS.SECRET_ACCESS_KEY

}

- : undefined

+ : undefined,

+ forcePathStyle: CONFIG.OBJECT_STORAGE.FORCE_PATH_STYLE

})

logger.info('Initialized S3 client %s with region %s.', getEndpoint(), OBJECT_STORAGE.REGION, lTags())

diff --git a/support/docker/production/config/custom-environment-variables.yaml b/support/docker/production/config/custom-environment-variables.yaml

index c7cd28e6521..a960bab0bc9 100644

--- a/support/docker/production/config/custom-environment-variables.yaml

+++ b/support/docker/production/config/custom-environment-variables.yaml

@@ -54,6 +54,8 @@ object_storage:

region: "PEERTUBE_OBJECT_STORAGE_REGION"

+ force_path_style: "PEERTUBE_OBJECT_STORAGE_FORCE_PATH_STYLE"

+

max_upload_part:

__name: "PEERTUBE_OBJECT_STORAGE_MAX_UPLOAD_PART"

__format: "json"

You can then recompile it with:

npm run build

And it can be started with:

NODE_ENV=production NODE_CONFIG_DIR=/srv/peertube/config node dist/server.js

Create resources in Garage

Create a key for Peertube:

garage key new --name peertube-key

Keep the Key ID and the Secret key somewhere safe; they will be needed later.

We need two buckets: one for webtorrent videos (named peertube-video) and one for streaming playlists (named peertube-playlist).

garage bucket create peertube-video

garage bucket create peertube-playlist

Now we allow our key to read and write on these buckets:

garage bucket allow peertube-playlist --read --write --key peertube-key

garage bucket allow peertube-video --read --write --key peertube-key

Finally, we need to expose these buckets publicly to serve their content to users:

garage bucket website --allow peertube-playlist

garage bucket website --allow peertube-video

These buckets are now accessible on the web port (by default 3902) with the following URL: http://<bucket><root_domain>:<web_port> where the root domain is defined in your configuration file (by default .web.garage). So we have currently the following URLs:

- http://peertube-playlist.web.garage:3902

- http://peertube-video.web.garage:3902

Make sure you (will) have a corresponding DNS entry for them.
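The URL scheme above is simple enough to express in a few lines of Python. This sketch uses the default root domain and web port quoted above. The function names are ours. The second helper reflects our reading of how a Host value maps back to a bucket (a host outside the root domain is taken as the bucket name itself, as with the nix.example.com bucket used elsewhere in this documentation), so treat that part as an assumption rather than a specification.

```python
def website_url(bucket: str, root_domain: str = ".web.garage",
                web_port: int = 3902, scheme: str = "http") -> str:
    """Build <scheme>://<bucket><root_domain>:<web_port>."""
    return f"{scheme}://{bucket}{root_domain}:{web_port}"


def bucket_from_host(host: str, root_domain: str = ".web.garage") -> str:
    """Assumed mapping from a Host header value to a bucket name."""
    hostname = host.split(":")[0]  # drop an optional port (no IPv6 literals)
    if hostname.endswith(root_domain) and len(hostname) > len(root_domain):
        # Host inside the root domain: the bucket is the remaining prefix.
        return hostname[: -len(root_domain)]
    # Otherwise the whole hostname is used as the bucket name.
    return hostname


for bucket in ("peertube-playlist", "peertube-video"):
    print(website_url(bucket))
print(bucket_from_host("peertube-video.web.garage:3902"))
```

Each hostname printed by website_url is one that needs a DNS entry.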

Configure a Reverse Proxy to serve CORS

Now we will configure a reverse proxy in front of Garage. This is required as we have no other way to serve CORS headers yet. Check the Configuring a reverse proxy section to know how.

Now make sure that your two DNS entries are pointing to your reverse proxy.

Configure Peertube

You must edit the file named config/production.yaml. We only modify the root key named object_storage:

object_storage:

  enabled: true

  # Put localhost only if you have a garage instance running on that node

  endpoint: 'http://localhost:3900' # or "garage.example.com" if you have TLS on port 443

  # This entry has been added by our patch, must be set to true

  force_path_style: true

  # Garage supports only one region for now, named garage

  region: 'garage'

  credentials:

    access_key_id: 'GKxxxx'

    secret_access_key: 'xxxx'

  max_upload_part: 2GB

  streaming_playlists:

    bucket_name: 'peertube-playlist'

    # Keep it empty for our example

    prefix: ''

    # You must fill this field to make Peertube use our reverse proxy/website logic

    base_url: 'http://peertube-playlist.web.garage' # Example: 'https://mirror.example.com'

  # Same settings but for webtorrent videos

  videos:

    bucket_name: 'peertube-video'

    prefix: ''

    # You must fill this field to make Peertube use our reverse proxy/website logic

    base_url: 'http://peertube-video.web.garage'

That's all

Everything should be configured now: simply restart Peertube and try to upload a video. You should see in your browser console that data is fetched directly from your bucket (through the reverse proxy).

Miscellaneous

Known bug: The playback does not start and some 400 Bad Request errors appear in your browser console and on Garage. If the description of the error contains HTTP Invalid Range: InvalidRange, the error is due to a buggy ffmpeg version. You must avoid version 4.4.0 and use either a newer or an older version.

Associated issues: #137, #138, #140. These issues are non blocking.

External link: Peertube Documentation > Remote Storage

Mastodon

https://docs.joinmastodon.org/admin/config/#cdn

Matrix

Matrix is a chat communication protocol. Its main stable server implementation, Synapse, provides a module to store media on an S3 backend. Additionally, a server-independent media store supporting S3 has been developed by the community. This was made possible by the design of the Matrix API, and it works with implementations like Conduit, Dendrite, etc.

synapse-s3-storage-provider (synapse only)

Supposing you have a working synapse installation, you can add the module with pip:

pip3 install --user git+https://github.com/matrix-org/synapse-s3-storage-provider.git

Now create a bucket and a key for your matrix instance (note your Key ID and Secret Key somewhere, they will be needed later):

garage key new --name matrix-key

garage bucket create matrix

garage bucket allow matrix --read --write --key matrix-key

Then you must edit your server configuration (eg. /etc/matrix-synapse/homeserver.yaml) and add the media_storage_providers root key:

media_storage_providers:

- module: s3_storage_provider.S3StorageProviderBackend

  store_local: True # do we want to store on S3 media created by our users?

  store_remote: True # do we want to store on S3 media created

                     # by users of other servers federated to ours?

  store_synchronous: True # do we want to wait until the file has been written before returning?

  config:

    bucket: matrix # the name of our bucket, we chose matrix earlier

    region_name: garage # only "garage" is supported for the region field

    endpoint_url: http://localhost:3900 # the path to the S3 endpoint

    access_key_id: "GKxxx" # your Key ID

    secret_access_key: "xxxx" # your Secret Key

Note that uploaded media will also be stored locally and this behavior cannot be deactivated; it is even required for some operations like resizing images. In fact, your local filesystem is treated as a cache, but without any automated way to garbage collect it.

We can build our garbage collector with s3_media_upload, a tool provided with the module.

If you installed the module with the command provided before, you should be able to add it to your PATH:

PATH=$HOME/.local/bin/:$PATH

command -v s3_media_upload

Now we can write a simple script (eg ~/.local/bin/matrix-cache-gc):

#!/bin/bash

## CONFIGURATION ##

AWS_ACCESS_KEY_ID=GKxxx

AWS_SECRET_ACCESS_KEY=xxxx

S3_ENDPOINT=http://localhost:3900

S3_BUCKET=matrix

MEDIA_STORE=/var/lib/matrix-synapse/media

PG_USER=matrix

PG_PASS=xxxx

PG_DB=synapse

PG_HOST=localhost

PG_PORT=5432

## CODE ##

PATH=$HOME/.local/bin/:$PATH

cat > database.yaml <<EOF

user: $PG_USER

password: $PG_PASS

database: $PG_DB

host: $PG_HOST

port: $PG_PORT

EOF

s3_media_upload update-db 1d

s3_media_upload --no-progress check-deleted $MEDIA_STORE

s3_media_upload --no-progress upload $MEDIA_STORE $S3_BUCKET --delete --endpoint-url $S3_ENDPOINT

This script lists all the media that were not accessed in the last 24 hours according to your database. It then checks which of these files still exist in the local media store. Files that are still in the cache are uploaded to S3 if they are not already present there (in case of a crash or an initial synchronisation). Finally, the script deletes these files from the cache.
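The decision logic described above can be modelled in a few lines of Python. This is only a sketch of the behaviour as described in this section, working on in-memory data; it is not how s3_media_upload is implemented, and the function name plan_cache_gc and its parameters are hypothetical.

```python
from typing import Dict, List, Set, Tuple

DAY = 24 * 3600  # seconds


def plan_cache_gc(last_access: Dict[str, float], local_files: Set[str],
                  remote_objects: Set[str], now: float,
                  max_idle: float = DAY) -> Tuple[List[str], List[str]]:
    """Return (to_upload, to_delete) for a local media cache."""
    # 1. Media not accessed during the last `max_idle` seconds.
    stale = [m for m, t in last_access.items() if now - t > max_idle]
    # 2. Keep only those still present in the local media store.
    cached = [m for m in stale if m in local_files]
    # 3. Upload what is missing on S3, then delete every cached stale file.
    to_upload = sorted(m for m in cached if m not in remote_objects)
    to_delete = sorted(cached)
    return to_upload, to_delete


access = {"a": 0.0, "b": 0.0, "c": 100000.0, "d": 0.0}
print(plan_cache_gc(access, {"a", "b", "c"}, {"a"}, now=100000.0))
```

In this example, a and b are stale and still cached, so both are deleted locally, but only b needs uploading first because a is already on S3. c was accessed recently and d is no longer in the cache, so neither is touched.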

Make this script executable and check that it works:

chmod +x $HOME/.local/bin/matrix-cache-gc

matrix-cache-gc

Add it to your crontab. Open the editor with:

crontab -e

And add a new line. For example, to run it every 10 minutes:

*/10 * * * * $HOME/.local/bin/matrix-cache-gc

External link: Github > matrix-org/synapse-s3-storage-provider

matrix-media-repo (server independent)

External link: matrix-media-repo Documentation > S3

Pixelfed

https://docs.pixelfed.org/technical-documentation/env.html#filesystem

Pleroma

https://docs-develop.pleroma.social/backend/configuration/cheatsheet/#pleromauploaderss3

Lemmy

via pict-rs https://git.asonix.dog/asonix/pict-rs/commit/f9f4fc63d670f357c93f24147c2ee3e1278e2d97

Funkwhale

https://docs.funkwhale.audio/admin/configuration.html#s3-storage

Misskey

https://github.com/misskey-dev/misskey/commit/9d944243a3a59e8880a360cbfe30fd5a3ec8d52d

Prismo

https://gitlab.com/prismosuite/prismo/-/blob/dev/.env.production.sample#L26-33

Owncloud Infinite Scale (ocis)

Unsupported

- Mobilizon: No S3 integration

- WriteFreely: No S3 integration

- Plume: No S3 integration

Websites (Hugo, Jekyll, Publii...)

Garage is also suitable to host static websites. While they can be deployed with traditional CLI tools, some static website generators have integrated options to ease your workflow.

Hugo

Add to your config.toml the following section:

[[deployment.targets]]

URL = "s3://<bucket>?endpoint=<endpoint>&disableSSL=<bool>&s3ForcePathStyle=true&region=garage"

For example:

[[deployment.targets]]

URL = "s3://my-blog?endpoint=localhost:9000&disableSSL=true&s3ForcePathStyle=true&region=garage"
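Since this URL packs several settings into a query string, it can be convenient to generate it rather than type it by hand. The Python sketch below assembles a deployment URL of this shape. The helper name hugo_s3_target and its parameters are assumptions for illustration; the query parameter names are the ones shown in the example.

```python
from urllib.parse import urlencode


def hugo_s3_target(bucket: str, endpoint: str, use_tls: bool,
                   region: str = "garage") -> str:
    """Build an s3:// deployment URL with path-style addressing forced."""
    params = {
        "endpoint": endpoint,
        "disableSSL": "false" if use_tls else "true",
        "s3ForcePathStyle": "true",  # Garage only supports path style
        "region": region,
    }
    # Keep ":" unescaped so that "host:port" endpoints stay readable.
    return f"s3://{bucket}?" + urlencode(params, safe=":")


print(hugo_s3_target("my-blog", "localhost:9000", use_tls=False))
```

Note that the parameters are separated by plain & characters and that the last one is named region.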

Then inform hugo of your credentials:

export AWS_ACCESS_KEY_ID=GKxxx

export AWS_SECRET_ACCESS_KEY=xxx

And finally build and deploy your website:

hugo

hugo deploy

External links:

Publii

It would require a patch either on Garage or on Publii to make both systems work.

Currently, the proposed workaround is to deploy your website manually:

- On the left menu, click on Server, choose Manual Deployment (the logo looks like a compressed file)

- Set your website URL, keep Output type as "Non-compressed catalog"

- Click on Save changes

- Click on Sync your website (bottom left of the app)

- On the new page, click again on Sync your website

- Click on Get website files

- You need to synchronize the output folder you see in your file explorer; we will use the minio client for this.

Make sure that you have configured the minio client.

Then copy this output folder:

mc mirror --overwrite output garage/my-site

Generic (eg. Jekyll)

Some tools do not support sending to an S3 backend but output a compiled folder on your system. We can then use any CLI tool to upload this content to our S3 target.

First, start by configuring minio client.

Then build your website:

jekyll build

And copy jekyll's output folder on S3:

mc mirror --overwrite _site garage/my-site

Repositories (Docker, Nix, Git...)

Whether you need to store and serve binary packages or source code, you may want to deploy a tool referred to as a repository or registry. Garage can also help you serve this content.

Gitea

You can use Garage with Gitea to store your git LFS data, your users' avatars, and their attachments.

You can configure a different target for each data type (check [lfs] and [attachment] sections of the Gitea documentation) and you can provide a default one through the [storage] section.

Let's start by creating a key and a bucket (your key id and secret will be needed later, keep them somewhere):

garage key new --name gitea-key

garage bucket create gitea

garage bucket allow gitea --read --write --key gitea-key

Then you can edit your configuration (by default /etc/gitea/conf/app.ini):

[storage]

STORAGE_TYPE=minio

MINIO_ENDPOINT=localhost:3900

MINIO_ACCESS_KEY_ID=GKxxx

MINIO_SECRET_ACCESS_KEY=xxxx

MINIO_BUCKET=gitea

MINIO_LOCATION=garage

MINIO_USE_SSL=false

You can also pass this configuration through environment variables:

GITEA__storage__STORAGE_TYPE=minio

GITEA__storage__MINIO_ENDPOINT=localhost:3900

GITEA__storage__MINIO_ACCESS_KEY_ID=GKxxx

GITEA__storage__MINIO_SECRET_ACCESS_KEY=xxxx

GITEA__storage__MINIO_BUCKET=gitea

GITEA__storage__MINIO_LOCATION=garage

GITEA__storage__MINIO_USE_SSL=false

Then restart your gitea instance and try to upload a custom avatar.

If it worked, you should see some content in your gitea bucket (you must configure your aws command before):

$ aws s3 ls s3://gitea/avatars/

2021-11-10 12:35:47 190034 616ba79ae2b84f565c33d72c2ec50861

External link: Gitea Documentation > Configuration Cheat Sheet

Gitlab

External link: Gitlab Documentation > Object storage

Private NPM Registry (Verdaccio)

External link: Verdaccio Github Repository > aws-storage plugin

Docker

Not yet compatible, follow #103.

External link: Docker Documentation > Registry storage drivers > S3 storage driver

Nix

Nix has no notion of repository in its terminology. Instead, it breaks this concept down into two parts: binary cache and channel.

A channel is a set of .nix definitions that generate definitions for all the software you want to serve.

Because we do not want all our clients to compile all these derivations by themselves, we can compile them once and then serve them as part of our binary cache.

It is possible to use a binary cache without a channel: you only need to serve your nix definitions through another medium, like a git repository.

As a first step, we will need to create a bucket on Garage and enable website access on it:

garage key new --name nix-key

garage bucket create nix.example.com

garage bucket allow nix.example.com --read --write --key nix-key

garage bucket website nix.example.com --allow

If you need more information about exposing buckets as websites on Garage, check Exposing buckets as websites and Configuring a reverse proxy.

Next, we want to check that our bucket works:

echo nix repo > /tmp/index.html

mc cp /tmp/index.html garage/nix.example.com/

rm /tmp/index.html

curl https://nix.example.com

# output: nix repo

Binary cache

To serve binaries as part of your cache, you need to sign them with a key specific to nix. You can generate the keypair as follows:

nix-store --generate-binary-cache-key <name> cache-priv-key.pem cache-pub-key.pem

You can then manually sign the packages of your store with the following command:

nix sign-paths --all -k cache-priv-key.pem

Setting a key in nix.conf will do the signature at build time automatically without additional commands.

Edit the nix.conf of your builder:

secret-key-files = /etc/nix/cache-priv-key.pem

Now that your content is signed, you can copy a derivation to your cache. For example, if you want to copy a specific derivation of your store:

nix copy /nix/store/wadmyilr414n7bimxysbny876i2vlm5r-bash-5.1-p8 --to 's3://nix.example.com?endpoint=garage.example.com&region=garage'

Note that if you have not signed your packages, you can append to the end of your S3 URL &secret-key=/etc/nix/cache-priv-key.pem.

Sometimes you don't want to hardcode this store path in your script.

Let's suppose that you are working on a codebase that you build with nix-build. You can then run:

nix copy $(nix-build) --to 's3://nix.example.com?endpoint=garage.example.com&region=garage'

This command works because the only thing that nix-build outputs on stdout is the paths of the built derivations in your nix store.

You can include your derivation dependencies:

nix copy $(nix-store -qR $(nix-build)) --to 's3://nix.example.com?endpoint=garage.example.com&region=garage'

Now, your binary cache stores your derivation and all its dependencies.

Just inform your users that they must update their nix.conf file with the following lines:

substituters = https://cache.nixos.org https://nix.example.com

trusted-public-keys = cache.nixos.org-1:6NCHdD59X431o0gWypbMrAURkbJ16ZPMQFGspcDShjY= nix.example.com:eTGL6kvaQn6cDR/F9lDYUIP9nCVR/kkshYfLDJf1yKs=

You must re-add cache.nixos.org because redeclaring these settings overrides the previous configuration instead of extending it.

Now, when your clients run nix-build or any command that generates a derivation for which a hash is already present on the binary cache, the client will download the result from the cache instead of compiling it, saving a lot of time and CPU!

Channels

Channels additionally serve Nix definitions, i.e. a .nix file referencing all the derivations you want to serve.

CLI tools

CLI tools allow you to query the S3 API without too many abstractions. These tools are particularly suitable for debugging, backups, website deployments or any scripted task that needs to handle data.

Minio client (recommended)

Use the following command to set an "alias", i.e. define a new S3 server to be used by the Minio client:

mc alias set \

garage \

<endpoint> \

<access key> \

<secret key> \

--api S3v4

Remember that mc is sometimes called mcli (such as on Arch Linux), to avoid conflicts with Midnight Commander.

Some commands:

# list buckets

mc ls garage/

# list objects in a bucket

mc ls garage/my_files

# copy from your filesystem to garage

mc cp /proc/cpuinfo garage/my_files/cpuinfo.txt

# copy from garage to your filesystem

mc cp garage/my_files/cpuinfo.txt /tmp/cpuinfo.txt

# mirror a folder from your filesystem to garage

mc mirror --overwrite ./book garage/garagehq.deuxfleurs.fr

AWS CLI

Create a file named ~/.aws/credentials and put:

[default]

aws_access_key_id=xxxx

aws_secret_access_key=xxxx

Then a file named ~/.aws/config and put:

[default]

region=garage

Now, supposing Garage is listening on http://127.0.0.1:3900, you can list your buckets with:

aws --endpoint-url http://127.0.0.1:3900 s3 ls

Passing the --endpoint-url parameter to each command is annoying but AWS developers do not provide a corresponding configuration entry.

As a workaround, you can redefine the aws command by editing the file ~/.bashrc:

function aws { command aws --endpoint-url http://127.0.0.1:3900 "$@" ; }

Do not forget to run source ~/.bashrc or to start a new terminal before running the next commands.

Now you can simply run:

# list buckets

aws s3 ls

# list objects of a bucket

aws s3 ls s3://my_files

# copy from your filesystem to garage

aws s3 cp /proc/cpuinfo s3://my_files/cpuinfo.txt

# copy from garage to your filesystem

aws s3 cp s3://my_files/cpuinfo.txt /tmp/cpuinfo.txt
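When the aws command is driven from a script rather than from an interactive shell, the same idea can be expressed as a small Python wrapper that always passes --endpoint-url. This is a minimal sketch: the names aws_argv and aws are ours, and the endpoint is the local address assumed throughout this section.

```python
import subprocess
from typing import List

ENDPOINT_URL = "http://127.0.0.1:3900"  # assumed local Garage S3 endpoint


def aws_argv(*args: str, endpoint_url: str = ENDPOINT_URL) -> List[str]:
    """Build the argument list for an aws call that targets Garage."""
    return ["aws", "--endpoint-url", endpoint_url, *args]


def aws(*args: str) -> subprocess.CompletedProcess:
    """Run the command; needs the aws CLI installed and Garage running."""
    return subprocess.run(aws_argv(*args), check=True)


# Only build and display the command here, without executing it.
print(aws_argv("s3", "cp", "/proc/cpuinfo", "s3://my_files/cpuinfo.txt"))
```

Because the arguments are passed as a list, file names containing spaces reach the aws CLI unchanged.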

rclone

rclone can be configured using the interactive assistant invoked using rclone config.

You can also configure rclone by writing its configuration file directly.

Here is a template rclone configuration file (mine is located at ~/.config/rclone/rclone.conf):

[garage]

type = s3

provider = Other

env_auth = false

access_key_id = <access key>

secret_access_key = <secret key>

region = <region>

endpoint = <endpoint>

force_path_style = true

acl = private

bucket_acl = private

Now you can run:

# list buckets

rclone lsd garage:

# list objects of a bucket aggregated in directories

rclone lsd garage:my-bucket

# copy from your filesystem to garage

echo hello world > /tmp/hello.txt

rclone copy /tmp/hello.txt garage:my-bucket/

# copy from garage to your filesystem

rclone copy garage:my-bucket/hello.txt .

# see all available subcommands

rclone help

Tip: use the --fast-list option when accessing buckets that contain a large number of objects. This greatly accelerates operations such as rclone sync or rclone ncdu by reducing the number of ListObjects calls that are made.

s3cmd

Here is a template for the s3cmd.cfg file to talk with Garage:

[default]

access_key = <access key>

secret_key = <secret key>

host_base = <endpoint without http(s)://>

host_bucket = <same as host_base>

use_https = <False or True>

And use it as follows:

# List buckets

s3cmd ls

# list objects inside a bucket

s3cmd ls s3://my-bucket

# copy from your filesystem to garage

echo hello world > /tmp/hello.txt

s3cmd put /tmp/hello.txt s3://my-bucket/

# copy from garage to your filesystem

s3cmd get s3://my-bucket/hello.txt hello.txt
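The host_base, host_bucket and use_https fields of the template above all follow from the endpoint URL used with the other tools. As a minimal sketch, assuming the endpoint is a plain http:// or https:// URL, they could be derived as follows. The function name s3cmd_settings is only illustrative and is not part of s3cmd or Garage.

```python
from urllib.parse import urlparse


def s3cmd_settings(endpoint):
    """Derive the s3cmd template fields from an endpoint URL.

    Hypothetical helper: it only splits the URL, it does not contact
    the server or write any file.
    """
    parsed = urlparse(endpoint)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("expected an endpoint such as http://127.0.0.1:3900")
    return {
        # endpoint without the http(s):// prefix
        "host_base": parsed.netloc,
        # same value as host_base, as stated in the template
        "host_bucket": parsed.netloc,
        # s3cmd expects the literal strings True or False
        "use_https": "True" if parsed.scheme == "https" else "False",
    }


for key, value in s3cmd_settings("http://127.0.0.1:3900").items():
    print(f"{key} = {value}")
```

The printed lines can be pasted under the [default] section, next to access_key and secret_key.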

Cyberduck & duck

TODO

Backups (restic, duplicity...)

Backups are essential for disaster recovery but they are not trivial to manage. Using Garage as your backup target will enable you to scale your storage as needed while ensuring high availability.

Borg Backup

Borg Backup is very popular among the backup tools but it is not yet compatible with the S3 API.

We recommend using any other tool listed in this guide because they are all compatible with the S3 API.

If you still want to use Borg, you can use it with rclone mount.

Restic

External links: Restic Documentation > Amazon S3

Duplicity

External links: Duplicity > man (scroll to "URL Format" and "A note on Amazon S3")

Duplicati

External links: Duplicati Documentation > Storage Providers

knoxite

External links: Knoxite Documentation > Storage Backends

kopia

External links: Kopia Documentation > Repositories

Your code (PHP, JS, Go...)

If you are developing a new application, you may want to use Garage to store your users' media.

The S3 API that Garage uses is a standard REST API, so as long as you can make HTTP requests, you can query it. You can check the S3 REST API Reference from Amazon to learn more.

Developing your own wrapper around the REST API is time-consuming and complicated. Instead, you can use one of the libraries that are already available.

Some of them are maintained by Amazon, some by Minio, others by the community.
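As an illustration, here is a minimal sketch of how one of these libraries (Amazon's boto3 for Python) could be pointed at Garage. It assumes a node listening on http://127.0.0.1:3900 and a region named garage, as in the AWS CLI example. The helper names and the bucket name in the comments are placeholders, not something Garage mandates.

```python
def garage_client_kwargs(endpoint, access_key, secret_key, region="garage"):
    """Build the keyword arguments passed to boto3.client("s3", ...)."""
    return {
        "endpoint_url": endpoint,            # Garage S3 API address
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region_name": region,               # should match the cluster's region
    }


def make_client(endpoint, access_key, secret_key, region="garage"):
    """Create the S3 client (requires `pip install boto3`)."""
    import boto3  # imported lazily so the helper above works without boto3

    return boto3.client(
        "s3", **garage_client_kwargs(endpoint, access_key, secret_key, region)
    )


# Example usage (needs a running Garage node and valid keys):
#
#   s3 = make_client("http://127.0.0.1:3900", "<access key>", "<secret key>")
#   for bucket in s3.list_buckets()["Buckets"]:
#       print(bucket["Name"])
#   s3.upload_file("/tmp/hello.txt", "my-bucket", "hello.txt")
```

The other SDKs listed below generally expose equivalent settings (endpoint, credentials, region); check each library's documentation for the exact names.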

PHP

- Amazon aws-sdk-php

Javascript

- Minio SDK

- Amazon aws-sdk-js

Golang

- Minio minio-go-sdk

- Amazon aws-sdk-go-v2

Python

- Minio SDK

- Amazon boto3

Java

- Minio SDK

- Amazon aws-sdk-java

Rust

- Amazon aws-rust-sdk

.NET

- Minio SDK

- Amazon aws-dotnet-sdk

C++

- Amazon aws-cpp-sdk

Haskell

- Minio SDK

FUSE (s3fs, goofys, s3backer...)

WARNING! Garage is not POSIX compatible. Mounting S3 buckets as filesystems will not provide POSIX compatibility. If you are not careful, you will lose or corrupt your data.

Do not use these FUSE filesystems to store any database files (e.g. MySQL, PostgreSQL, MongoDB or SQLite), any daemon cache (dovecot, openldap, gitea, etc.), or more generally the data of any software that uses locking, relies on advanced filesystem features or makes any synchronisation assumption. Ideally, avoid these solutions altogether for any serious or production use.

rclone mount

rclone uses the same configuration when used in CLI and mount mode.

We suppose you have the following entry in your rclone.ini (mine is located in ~/.config/rclone/rclone.conf):

[garage]

type = s3

provider = Other

env_auth = false

access_key_id = <access key>

secret_access_key = <secret key>

region = <region>

endpoint = <endpoint>

force_path_style = true

acl = private

bucket_acl = private

Then you can mount and access any bucket as follows:

# mount the bucket

mkdir /tmp/my-bucket

rclone mount --daemon garage:my-bucket /tmp/my-bucket

# set your working directory to the bucket

cd /tmp/my-bucket

# create a file

echo hello world > hello.txt

# access the file

cat hello.txt

# unmount the bucket

cd

fusermount -u /tmp/my-bucket

External link: rclone documentation > rclone mount

s3fs

External link: s3fs github > README.md

goofys

External link: goofys github > README.md

s3backer

External link: s3backer github > manpage

csi-s3

External link: csi-s3 Github > README.md

Reference Manual

A reference manual contains extensive descriptions of the features and behaviour of the software. Reading this chapter is recommended once you have a good understanding of Garage. It will be useful if you want to tune Garage or use it in unusual conditions.

Garage configuration file format reference

Here is an example garage.toml configuration file that illustrates all of the possible options:

metadata_dir = "/var/lib/garage/meta"

data_dir = "/var/lib/garage/data"

block_size = 1048576

replication_mode = "3"

compression_level = 1

rpc_secret = "4425f5c26c5e11581d3223904324dcb5b5d5dfb14e5e7f35e38c595424f5f1e6"

rpc_bind_addr = "[::]:3901"

rpc_public_addr = "[fc00:1::1]:3901"

bootstrap_peers = [

"563e1ac825ee3323aa441e72c26d1030d6d4414aeb3dd25287c531e7fc2bc95d@[fc00:1::1]:3901",

"86f0f26ae4afbd59aaf9cfb059eefac844951efd5b8caeec0d53f4ed6c85f332@[fc00:1::2]:3901",

"681456ab91350f92242e80a531a3ec9392cb7c974f72640112f90a600d7921a4@[fc00:B::1]:3901",

"212fd62eeaca72c122b45a7f4fa0f55e012aa5e24ac384a72a3016413fa724ff@[fc00:F::1]:3901",

]

consul_host = "consul.service"

consul_service_name = "garage-daemon"

sled_cache_capacity = 134217728

sled_flush_every_ms = 2000

[s3_api]

api_bind_addr = "[::]:3900"

s3_region = "garage"

root_domain = ".s3.garage"

[s3_web]

bind_addr = "[::]:3902"

root_domain = ".web.garage"

index = "index.html"

The following gives details about each available configuration option.

Available configuration options

metadata_dir

The directory in which Garage will store its metadata. This contains the node identifier, the network configuration and the peer list, the list of buckets and keys, as well as the index of all objects, object versions and object blocks.

Store this folder on a fast SSD drive if possible to maximize Garage's performance.

data_dir

The directory in which Garage will store the data blocks of objects.

This folder can be placed on an HDD. The space available for data_dir should be counted to determine a node's capacity when configuring it.

block_size

Garage splits stored objects into consecutive chunks of size block_size (except the last one, which might be smaller). The default size is 1MB and should work in most cases. If you are interested in tuning this, feel free to do so (and remember to report your findings to us!). If this value is changed for a running Garage installation, only newly uploaded files will be affected. Previously uploaded files will remain available. However, this means that chunks from existing files will not be deduplicated with chunks from newly uploaded files, so you might use more storage space than is optimally possible.
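To make the chunking described above concrete, here is a minimal sketch that computes the sizes of the chunks an object of a given size would be split into. It only models the rule stated in this section (full blocks followed by a possibly smaller last one) and ignores any special handling Garage may apply internally. The function name split_into_blocks is hypothetical.

```python
DEFAULT_BLOCK_SIZE = 1048576  # value used in the example garage.toml above


def split_into_blocks(object_size, block_size=DEFAULT_BLOCK_SIZE):
    """Return the sizes (in bytes) of the consecutive chunks of an object."""
    if object_size < 0 or block_size <= 0:
        raise ValueError("object_size must be >= 0 and block_size > 0")
    full_blocks, remainder = divmod(object_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # the last chunk holds whatever is left and may be smaller
        sizes.append(remainder)
    return sizes


print(split_into_blocks(2_500_000))            # two full blocks plus a remainder
print(split_into_blocks(2_500_000, 2097152))   # same object, larger block_size
```

With a different block_size the chunk boundaries move, which is why chunks written before and after a change do not line up for deduplication.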

replication_mode

Garage supports the following replication modes:

- none or 1: data stored on Garage is stored on a single node. There is no redundancy, and data will be unavailable as soon as one node fails or its network is disconnected. Do not use this for anything other than test deployments.

- 2: data stored on Garage will be stored on two different nodes, if possible in different zones. Garage tolerates one node failure before losing data. Data should be available read-only when one node is down, but write operations will fail. Use this only if you really have to.

- 3: data stored on Garage will be stored on three different nodes, if possible each in a different zone. Garage tolerates two node failures before losing data. Data should be available read-only when two nodes are down, and writes should be possible if only a single node is down.

Note that in modes 2 and 3, if at least the same number of zones are available, an arbitrary number of failures in any given zone is tolerated, as copies of data will be spread over several zones.

Make sure replication_mode is the same in the configuration files of all nodes. Never run a Garage cluster where that is not the case.

Changing the replication_mode of a cluster might work (make sure to shut down all nodes and change it everywhere at the same time), but is not officially supported.
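The availability guarantees of the replication modes listed above can be summarised in a small lookup table. The sketch below simply transcribes that list; it is a reading aid, not Garage's actual implementation. Here nodes_down counts unavailable nodes among those holding copies of a given object, and the names TOLERANCE and availability are hypothetical.

```python
# Number of unavailable nodes each mode tolerates for reads and writes,
# transcribed from the description of the replication modes.
TOLERANCE = {
    "none": {"copies": 1, "read": 0, "write": 0},
    "1":    {"copies": 1, "read": 0, "write": 0},
    "2":    {"copies": 2, "read": 1, "write": 0},
    "3":    {"copies": 3, "read": 2, "write": 1},
}


def availability(replication_mode, nodes_down):
    """Tell whether reads and writes should still work with nodes_down failures."""
    limits = TOLERANCE[replication_mode]
    return {
        "read": nodes_down <= limits["read"],
        "write": nodes_down <= limits["write"],
    }


for mode in ("1", "2", "3"):
    for down in range(TOLERANCE[mode]["copies"] + 1):
        print(mode, down, availability(mode, down))
```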

compression_level

Zstd compression level to use for storing blocks.

Values between 1 (faster compression) and 19 (smaller file) are standard compression levels for zstd. From 20 to 22, compression levels are referred to as "ultra" and must be used with extra care, as they use a lot of memory. A value of 0 will let zstd choose a default value (currently 3). Finally, zstd also has compression levels designed to be faster than the default ones; they range from -1 (smaller file) to -99 (faster compression).
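The ranges described above can be checked with a few lines of code before a value is written to garage.toml. This is a minimal sketch that only encodes the ranges given in this section. The category labels and the function name are illustrative and are not identifiers used by Garage or zstd.

```python
def describe_compression_level(level):
    """Classify a compression_level value according to the ranges above."""
    if level == 0:
        return "default"   # zstd picks its own default level
    if 1 <= level <= 19:
        return "standard"  # 1 is fastest, 19 gives the smallest files
    if 20 <= level <= 22:
        return "ultra"     # use with care: high memory usage
    if -99 <= level <= -1:
        return "fast"      # -1 gives smaller files, -99 is fastest
    raise ValueError(f"compression level {level} is outside the documented ranges")


for level in (1, 19, 22, 0, -5):
    print(level, describe_compression_level(level))
```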